In contemporary markets, artificial intelligence has become one of the most relied-upon decision-makers. From credit scoring and medical diagnostics to trading strategies, models increasingly shape outcomes that affect real capital and real lives. But beneath this progress lies an unresolved tension. The bigger models get, the more data they need. And the more data they consume, the harder it is to verify their behavior without revealing the information they were built on. This tension between confidentiality and transparency is no longer hypothetical. It is structural.

The Trust Gap Between Data and Decisions

Each technological advance opens a new trust gap. Traditional finance relied on custodians. In cryptocurrency, it was intermediaries. In AI, models trained on opaque data make decisions that cannot be directly verified. Institutions want assurance that models are fair, consistent, and accurate. Users want privacy. Regulators want accountability. These demands often collide.

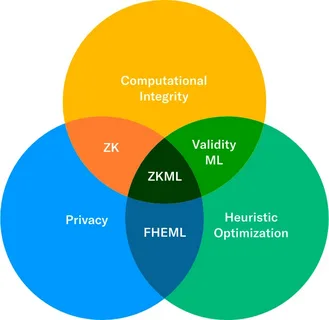

This is where ZKML (Zero-Knowledge Machine Learning) reframes the discussion. Instead of requiring stakeholders to trust model owners or auditors, it puts cryptographic proof in the role of the third party. A model owner can prove that a model ran correctly, on valid data, and according to predefined rules, without disclosing the data itself. This shift matters well beyond engineering. It redefines trust as a mathematical property rather than an institutional promise.
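To make the idea of binding a prediction to a fixed model more concrete, here is a minimal sketch of a salted hash commitment. It is not a zero-knowledge proof: in this toy version the auditor still has to see the weights to check them, which is exactly the reveal step a ZKML system would replace with a proof. The linear model, the feature names, and the functions `commit`, `predict`, and `audit` are all hypothetical and exist only for illustration.

```python
import hashlib
import json
import secrets


def commit(weights, salt):
    """Return a SHA-256 commitment to the weights, blinded by a random salt."""
    payload = json.dumps(weights, sort_keys=True).encode() + salt
    return hashlib.sha256(payload).hexdigest()


def predict(weights, features):
    """Hypothetical stand-in model: an integer linear score over named features."""
    return sum(weights[name] * value for name, value in features.items())


def audit(commitment, weights, salt, features, claimed_score):
    """Check that the revealed weights match the commitment and yield the claimed score.

    Assumption: the auditor is shown the weights and salt. A real ZKML
    system would prove this relation without revealing either.
    """
    return (
        commit(weights, salt) == commitment
        and predict(weights, features) == claimed_score
    )


# The model owner publishes only the commitment ahead of time.
model_weights = {"income": 3, "debt": -2}
model_salt = secrets.token_bytes(16)
published = commit(model_weights, model_salt)

# Later, a score is claimed for some applicant: 3*10 + (-2)*4 = 22.
applicant = {"income": 10, "debt": 4}
print(audit(published, model_weights, model_salt, applicant, 22))
```

The commitment fixes the model before any decision is made, so the owner cannot quietly swap weights afterward. What it cannot do on its own is keep the weights private during the audit.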

Why Verifiability Matters More than Model Accuracy

Markets are obsessed with performance metrics. Headlines are full of accuracy rates, benchmark scores, and training efficiency. But history suggests that systems lose trust less because they are inaccurate than because they are unverifiable. Confidence is quickly lost when results cannot be explained or justified.

ZKML addresses this blind spot by decoupling performance from disclosure. It becomes possible to prove that a prediction follows from the model's logic without revealing the inputs, the weights, or the proprietary architecture. For financial institutions, this offers a way to demonstrate compliance without giving up intellectual property. For users, it offers assurance that a decision was not arbitrary.
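The core move, proving a claim without revealing the secret behind it, can be illustrated with a Schnorr-style proof of knowledge made non-interactive with a hash-derived challenge (the Fiat-Shamir approach). This is only a toy: it proves knowledge of a secret exponent, not the correct execution of a model, and the parameters `P` and `G` are small illustrative choices rather than production-grade ones. A real ZKML system would instead prove the model's computation inside an arithmetic circuit. All names below are assumptions made for the sketch.

```python
import hashlib
import secrets

P = 2**127 - 1  # a Mersenne prime; far too small for real-world security
G = 3           # illustrative base; exponents are reduced modulo P - 1


def _challenge(public_value, commitment):
    """Derive the challenge by hashing the public transcript."""
    data = f"{G}:{public_value}:{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % (P - 1)


def prove(secret):
    """Return the public value y = G^secret and a proof that the prover knows secret."""
    r = secrets.randbelow(P - 1)          # one-time random nonce
    t = pow(G, r, P)                      # commitment to the nonce
    y = pow(G, secret, P)                 # public value
    c = _challenge(y, t)
    s = (r + c * secret) % (P - 1)        # response; the nonce masks the secret
    return y, (t, s)


def verify(y, proof):
    """Check G^s == t * y^c (mod P) using only public values."""
    t, s = proof
    c = _challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, proof = prove(123456789)
print(verify(y, proof))
```

The verifier never sees the secret, only `y` and the pair `(t, s)`, and anyone holding those values can run `verify` independently. That is the property the text relies on: the check is public even though the underlying information is not.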

In behavioral finance terms, verifiability lowers perceived risk. Investors and counterparties are more willing to participate in systems they can audit, even indirectly. Over the long run, this reduces the trust premium embedded in AI-driven services.

Privacy: An Economic Benefit, Not a Limit

Privacy is commonly framed as a trade-off. Greater privacy implies reduced utility. Less transparency implies greater risk. This framing misses one important point. In a competitive market, privacy is an advantage. Companies protect information because it is an asset. People protect information because it is personal. The difficulty is making cooperation possible without exposure.

ZKML enables models to work with sensitive data and still prove their correctness to outsiders. This allows shared intelligence without shared raw data. In healthcare, models can validate diagnoses across institutions without patient information ever being transferred. In finance, risk models can be stress-tested without disclosing positions.

This expands the market for AI applications. Whole industries that were previously held back by data protection law or reputational risk can now take part. The outcome is not only better privacy but wider adoption.

Investor Psychology and the Fear of Black Boxes

The black box problem is one of the quieter sources of market skepticism about AI. When losses occur, explanations matter. Stakeholders want narratives that make sense. Any system that cannot be explained becomes a scapegoat.

By enabling provable execution, ZKML turns black boxes into gray boxes. The inner workings can stay hidden, yet their integrity can be proven. This is a subtle but powerful distinction. It turns suspicion into a sense of order.

Like audited financial statements, zero-knowledge proofs do not reveal every parameter. They simply establish that the rules were followed. Over time, this makes AI look like infrastructure rather than speculation.

Trust in the Data Economy: Attaining Scale

The long-term challenge is not building smarter models. It is scaling trust across millions of interactions. The cost of mistrust rises steeply as AI is integrated into payments, identity systems, and market infrastructure. A single failure can spread across ecosystems.

ZKML offers a way to make trust modular. Proofs can be verified independently, across chains and across platforms, without relying on centralized auditors. This mirrors the development of cryptographic finance itself, in which verification replaced reliance.

In economic terms, this reduces coordination costs. Parties do not need established relationships or even shared legal frameworks to cooperate. They need proofs, and proof is more dependable than faith.

Conclusion

Technological revolutions are rarely defined by their capabilities. They are defined by what people can trust. AI has become influential enough to require new mechanisms of trust rather than better marketing. ZKML is a quiet yet radical rethinking of how intelligence, privacy, and verification can coexist.

Instead of forcing a trade-off between transparency and confidentiality, it dissolves the trade-off altogether. Models can be very powerful without being invasive. Decisions can be trusted without being exposed. In the long run, the systems that survive will not be the ones that know the most, but the ones that can prove they do what they claim and that know only what they need to.